So here I am on my way to University for one of the last times!

I have everything and am happy with what I have produced. I managed everything I set out to do. The only unfortunate thing is that I couldn't find time to do a final update on rendering/lighting with screenshots!

My final day consisted of last-minute, frame-by-frame checking of my shot. Most of the bad stuff was to do with the 3D holdout. I had to re-align the bike with the plate for a handful of frames. To do this I animated some vertices for the sake of getting a certain result. I then re-ran these frames through my grading pipeline, tweaking the comp accordingly. I was also busily rendering video files in various formats, as well as burning DVDs and preparing artwork for insertion.

Once I arrive at university I must print and bind my blog, as well as printing my written report. This can then be handed in to the office along with my diary, a DVD in a case and a hard drive containing the various project files.

i am ross

In this blog I have set out to collect all the things that have inspired me about, or are relevant to, my final year CGT project. I recommend viewing this blog in chronological order, by clicking the link below.

Monday, 13 May 2013

Sunday, 12 May 2013

Final year project update 4

In this update I would love to say more about how I lit and rendered my scene, but I am organizing my submission instead. I would like to say that, of my lighting experience, the biggest improvement came when I began to use an HDR for the fill light. Having an area light emit diffuse gave me nice falloff, while having the directional light cast shadows and emit specular was good for render time. I had never done this before; it was advice I picked up from a professional lighting junior I know. Separating the AO but combining the specular was a result of the following...

A main issue I had was with the depth of field in my shot. I haven't mentioned it until now because I found a solution fairly quickly. Normally I would use a depth pass from Maya in Nuke to defocus in Z. In this instance, because of the small leaves and their overlapping at different depths, a depth pass was really not working. I couldn't afford to sample high enough to get the edges to line up. So I did something I had never done before: I used Maya's physical lens shader on my shot camera. This renders out a burnt-in depth defocus. It added quite a bit to my render time, but it worked in the comp, especially as my comp's softening helped the undersampled areas.

Next time I should render out several passes of particles, at incremental depth slices, or individually (with IDs maybe), giving the defocus plugin a better chance of looking nice.
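As a rough sketch of the depth-slice idea (plain Python, not a Maya or Nuke setup), particles could be grouped into evenly spaced slices by their distance from the camera, with each slice rendered as its own pass. The function name and the sample depths are made up for illustration.

```python
def assign_depth_slices(depths, num_slices):
    """Return a slice index (0 = nearest) for each camera-space depth."""
    near, far = min(depths), max(depths)
    span = (far - near) or 1.0  # avoid dividing by zero if all depths match
    indices = []
    for d in depths:
        i = int((d - near) / span * num_slices)
        # the farthest particle lands exactly on the upper bound, so clamp it
        indices.append(min(i, num_slices - 1))
    return indices


# Hypothetical leaf distances from the camera, in scene units.
leaf_depths = [2.0, 3.5, 5.0, 7.5, 10.0]
print(assign_depth_slices(leaf_depths, 4))
```

Each slice could then be defocused by a single amount in the comp, so overlapping leaf edges at different depths never share one depth value.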

In the end I decided that the leaves looked very stiff and rigid and did in fact need to deform, so I cut new faces into them and used two wave deformers to give the instanced geometry some curvy animation. This looked a lot better and was a simple, quick last-minute addition.

Saturday, 11 May 2013

Retiming a clip for edit's sake!

I have altered my edit to account for my VFX clip's CG falling short of the initial clip's length. I knew I had not tracked the full duration of the clip from the initial edit. My thoughts were to take over with a 2D track at a certain point. That, or alter the edit to suit. I have found that this means the successive clip needs to be lengthened to 121% of the original.
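To put numbers on that stretch, here is a minimal arithmetic sketch. The 100-frame clip length is a placeholder, not the real shot length, and the function name is my own.

```python
def retime(source_frames, length_percent):
    """Return (new_frame_count, playback_speed) for a stretched clip."""
    factor = length_percent / 100.0
    new_frames = round(source_frames * factor)
    speed = 1.0 / factor  # a longer clip plays back slower
    return new_frames, speed


# 100 frames is a made-up source length used only for illustration.
frames, speed = retime(100, 121)
print(frames, round(speed, 3))
```

Lengthening to 121% is the same as slowing playback to roughly 83% speed, which means about one frame in every five has to be synthesised by the retiming tool.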

I welcomed this opportunity to see how having very high frame rates aids retiming software. Having written out a DPX sequence as before, I tried switching between frame blending and motion estimation on Nuke's Kronos and OFlow nodes. I suspect the best result will be motion estimation on Kronos...

... and I was right. Frame blending looked rubbish because of the motion in the action. Artefacting through motion estimation was higher on OFlow, so I favoured Kronos' result. Very convincing. Much better than any of your Twixtor crap. :p

Just need to write it out and replace the clip in Premiere. Happy days.

Testing DVD burns!

Had to try to meet the rather annoying requirements of the project submission. I have to experiment with different PAL and HD formats, with different codecs, to achieve the best DVD result. I read a great website on HD footage to DVD conversion. The result is yet to be confirmed, but I thought I'd point out more of the learning and experimenting I have undertaken.

Friday, 10 May 2013

Final year project update 3

To create the leaves and particle simulation I used regular Maya particles. I used a volume axis field to give a direction and some turbulence to the particles.

The rotation comes from a per particle expression. I wanted the leaves to scale up from 0 to 1 as they were born, so I attached a ramp to a custom per particle attribute. There are creation expressions for the scale and rotation per particle, and a runtime before dynamics expression to keep them rotating.

This ramp represents the particles' scale over their lifespan.

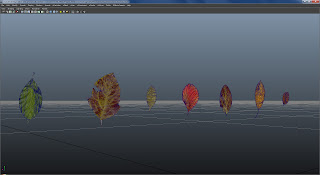
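The creation/runtime split can be sketched outside Maya in plain Python. This is not the actual particle expression: the linear ramp shape, the `grow_fraction` value, the spin range, and the class and function names are all assumptions made for illustration.

```python
import random


def ramp_scale(age, lifespan, grow_fraction=0.2):
    """Linear ramp: 0 at birth, reaching 1 after grow_fraction of the lifespan."""
    t = max(0.0, min(age / lifespan, 1.0))  # normalised age, clamped to 0..1
    return min(t / grow_fraction, 1.0)


class Leaf:
    def __init__(self, lifespan, rng):
        # Stands in for the creation expressions: evaluated once at birth.
        self.age = 0.0
        self.lifespan = lifespan
        self.rotation = rng.uniform(0.0, 360.0)  # random starting angle
        self.spin = rng.uniform(-90.0, 90.0)     # degrees per second (assumed)
        self.scale = 0.0

    def step(self, dt):
        # Stands in for the runtime expression: evaluated every frame.
        self.age += dt
        self.rotation = (self.rotation + self.spin * dt) % 360.0
        self.scale = ramp_scale(self.age, self.lifespan)


leaf = Leaf(lifespan=4.0, rng=random.Random(1))
for _ in range(24):  # one second at 24 fps
    leaf.step(1.0 / 24.0)
print(round(leaf.scale, 2), round(leaf.rotation, 1))
```

The point of the split is that the random values are chosen once per leaf at birth, while the per-frame step only advances them, so each leaf keeps a consistent spin.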

Once I had established a preliminary animation/simulation I needed to create some leaves.

I acquired some textures from a range of sources including my garden and the internet. I 'cut them out' and colour corrected them in Photoshop.

I didn't want to model the leaves individually and had some bad experiences with rendering leaf textured cards with transparency in mental ray for all of the passes. I did some digging and discovered a Maya feature called 'Texture to geometry'. After toying with this feature I got some results I could use. Useless faces were shaded by a shader related to the texture's alpha, so I could select and delete them, leaving me with some weird leaves.

Unless I need these leaves to deform, the topology is acceptable. It is easy enough to remove non border edges and re-cut manually later. I wanted to work this way as I knew I may change the leaves later.

After this, all that was required to move on was to use an instancer to assign leaves to the individual particles. I used another creation expression per particle to choose random leaves from the instancer index. I duplicated the leaves I wanted to favour, to balance the appearance of the different coloured leaves.
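The duplication trick works because a uniform random index over a list with repeats becomes a weighted pick. A small plain-Python sketch of that idea follows; the leaf names are hypothetical and this is not the Maya expression itself.

```python
import random
from collections import Counter

# Hypothetical instancer list: "brown_a" is duplicated so it is favoured.
leaf_instances = ["green_a", "green_b", "brown_a", "brown_a", "yellow_a", "brown_a"]


def pick_leaf_index(rng, instance_count):
    """Uniform random index, chosen once per particle at creation."""
    return rng.randrange(instance_count)


rng = random.Random(7)
picks = [leaf_instances[pick_leaf_index(rng, len(leaf_instances))]
         for _ in range(6000)]
print(Counter(picks).most_common())
```

With three of the six slots taken by one leaf, roughly half of all particles end up using it, without any extra weighting logic in the expression.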

All that remains for this section is to infinitely tweak and improve my animation! I have a good idea about the composition with the leaves and the empty space in the frame is intentional breathing space for my CG leaves!

In my next blog entry I will go over the lighting and probably the rendering too.

Tuesday, 7 May 2013

Final year project update 2

Having tracked my camera and created a scene for it to move around in, my next 3D step was to create a particle simulation, mimicking the motion of a cloud of leaves. The idea is to emit the leaves from the bicycle geometry, creating the impression that the dissipation of the bike in 2D is matched with the areas the leaves emit from. I neatly modelled my bike with simple geometry, checking it against a photograph and some precise measurements. I did not want complicated, perfect geometry; I wanted something light and easy to edit.

Once imported into my scene I began the process of hand matchmoving the BMX to the plate. I used my scene's geometry as a guide as to where in space I would expect the BMX to be. Eventually I began to get a result that I was pleased with. I was matching the BMX frame and rear wheel to the plate, then adjusting the handlebar rotation as well. This was a long process across ~330 frames.

Once complete I set up a projected B&W ramp, parented to the animated BMX. This would act as an emission texture. I tested it with plain particles and eventually got it working right. This ramp allowed me nice control over the 'wipe' emission I wanted, from front of bike to back. I had some difficulty at this point as I needed the separately animatable bars and frame of the bike to be a single mesh for emission purposes, so I combined them and left the history alone. I opted to abandon the projection and just planar project the UVs, applying the ramp as a texture. Same result as the projection but much cleaner for my purposes.

Once I had reached a stage where I could emit particles from a texture, the next task was to craft the simulation and some leaves to instance. I will outline these steps in my next blog entry.

Tuesday, 9 April 2013

Final year project diary update

I am posting this as an update to my final major project progress diary, but have opted for a blog entry as I need to use a lot of screenshots and I don't have a printer! I will be providing a step by step account of how I have achieved my work so far, along with any issues I faced and the learning I had to undertake to overcome them.

Once I had made my selects from the rushes I captured on the day of the shoot, I exported from Premiere a full quality, linear colorspace DPX sequence of the frames that comprise the VFX shot I am working on for my project.

The next step was to track the camera. I knew we used a 50mm lens for this particular shot, so I entered this along with our camera's sensor size. I found this information online, and thankfully my camera had a full frame 35mm sensor, which measures 24.89mm x 18.67mm as standard. I then tracked 8 solid points in my sequence (rec709 colorspace) and fed them into the camera tracker. The result was encouraging! I exported the resulting camera and pointcloud as an FBX.
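The reason the tracker wants both numbers is that focal length and sensor size together fix the camera's field of view. A quick sketch using the standard pinhole formula and the values quoted above (the function name is my own):

```python
import math


def field_of_view_deg(sensor_mm, focal_length_mm):
    """Angle of view across one sensor dimension, pinhole camera model."""
    return math.degrees(2.0 * math.atan(sensor_mm / (2.0 * focal_length_mm)))


# Values as entered into the tracker: 50mm lens, 24.89mm x 18.67mm sensor.
horizontal = field_of_view_deg(24.89, 50.0)
vertical = field_of_view_deg(18.67, 50.0)
print(round(horizontal, 2), round(vertical, 2))
```

If the sensor size were entered wrongly, the solved camera would have the wrong field of view and the 3D scene would not line up with the plate, even with good 2D tracks.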

After the export I imported the sequence into Nuke and configured the project, as well as locking the range to the frames where work is required. This prevents any unnecessary scrubbing/rendering/caching.

After tracking the camera I had to lay out a scene to help verify my track and also matchmove it within the scene. I acquired reference images of the location, most usefully the aerial view from Google Maps. I made some curves in Maya that roughly matched the profiles of the ramps, and aligned these along with primitive shapes to match the contours and buildings on location that feature in the shot. I decided not to take the time required to perfect the model to the plate, but I think it is around 90% of the way there as it is.

This is a technique I learnt from The Art and Technique of Matchmoving, and it was fantastically useful in helping me position my camera. It also came in very handy when hand matching a 3D BMX to the plate, ensuring it made sense in the scene. I proceeded to 'scooch' the camera into position, trying to align the wireframe geometry to its corresponding piece of the image. All the while I was bearing in mind the information I had from set, and I referred to a photograph of the camera on the day as a 'sanity check'. I also used the locators in my point cloud to see whether the things I tracked in the first place made sense as to where they lined up with the scene in 3D space. They did. PERFECTLY!!!!
